It's been five years, give or take, since my last blog post. That's pretty strong evidence that I'm not the type who needs to get things off my chest or down on paper. Still, I've volunteered to run a quick workshop at work, and I need a format that'll help me think through what to present and how to present it.

I'm reminded of my last post, back in August of 2015. Troy Hunt and a number of other highly successful people create content with the intent to repurpose it: a tweet becomes a blog post, which becomes a workshop. Each refinement requires effort, but it never starts from scratch. And the ideas have been road-tested in a less time-intensive format before being converted into something that takes serious commitment.

So, let's say we've got an organization with a number of developers who are interested in Git but haven't worked extensively with it. They're all quick learners, but most are working with Team Foundation (TF) for version control, so many of the concepts will be foreign. What concepts does Git bring to the table?

The high points that come to mind are:

- Local branching

- Rewriting history, for example with rebase

- Pull-based merging

- Commits stored as a graph (each commit points to its parents)

- Garbage collection of orphaned commits

- Remote repositories

- Stash for handy changes that I want applied locally but don't want to share with others

Stories

Telling stories is important in a presentation. And my goal is to use stories to lead my audience to certain conclusions, to educate, and to inspire. So what can I share in ~45 minutes that really hooks them and gives them the confidence to learn more? What can I do to help them become better by using what I think is a better tool?

I need a story arc. The classic hero's journey. We need to start at a place that ain't bad. Things take a turn for the worse. They hit rock bottom then start getting better. The hero triumphs and ends up better than where they started. How do I do that with source control?

I took that journey. I was using TF for version control and I could do my job. It sometimes sucked to have 20 or more files with local edits. Merge conflicts were sometimes a train wreck if the branch had drifted far away from the trunk. I could not switch context from story to story; instead I'd have to take the time to create a shelveset (too much work) or just have multiple work streams all joined together and do my best not to cross the streams.

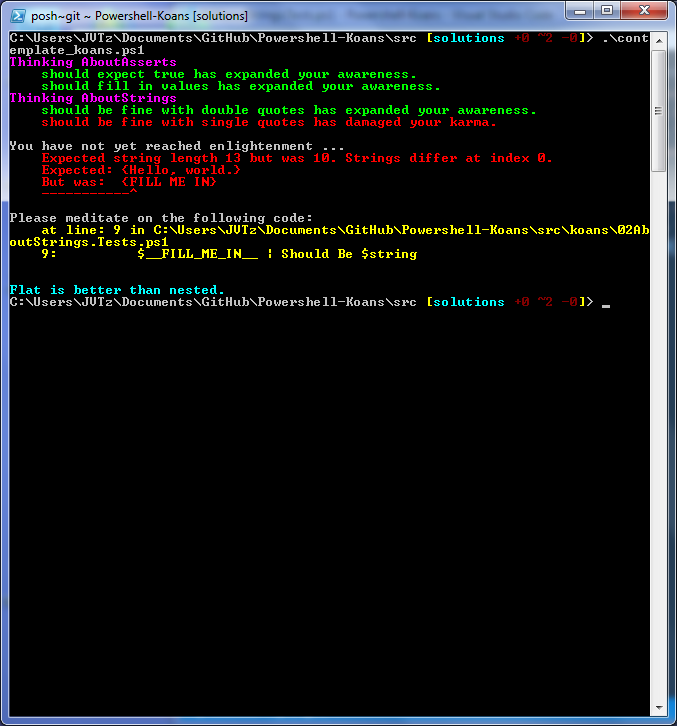

Somewhere around 2014 I got curious about Git and started googling around. I wanted a clean path to migrate a TF repository to a Git repo. I found Git-TFS and, better yet, it had a Chocolatey package (link). I migrated our repo through trial and error, then set about finding how to use my new toolchain productively. Heck, in the worst case I'd learn a lot about Git and how it doesn't work for me.

Phil Haack had some excellent blog posts about how to create aliases in Git. I took about half of his scripts and rewrote them to work with TFS. A lot of Phil's other blog posts helped me think about Git flow, GitHub flow, and what I'd eventually think of as Git-TFS flow.

Git-TFS flow

Every day I pick up new work. There's a story on our storyboard that I want to do, so I read the requirements and then get down to business. While I read, I make sure I've got the latest source code from TF merged into my local Git trunk (e.g. git tfup).

[alias]

tfup = !git tfs pull --rebase --debug $@

I start by creating a new branch and switching to it (e.g. git cob ABC-1234). I name my branches after the story ID just to keep them straight.

cob = checkout -b

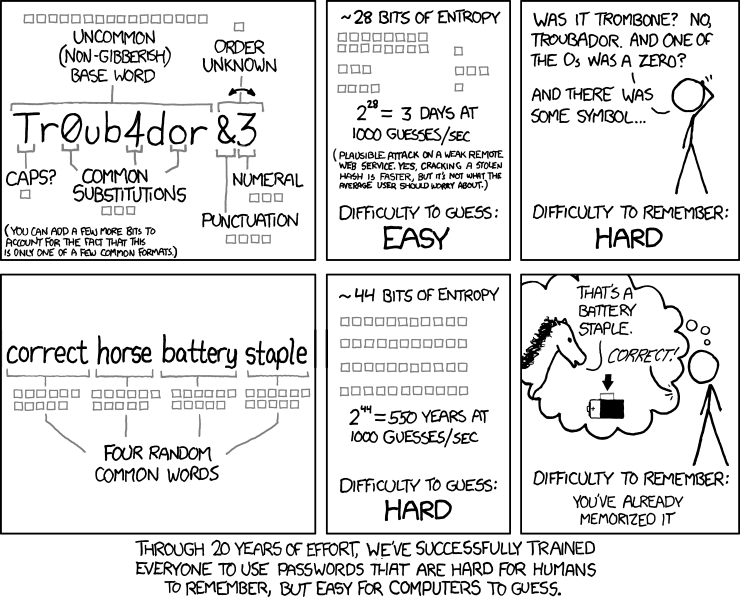

I write some code. Every time I get something working I try to commit it with a good commit message (e.g. git cm "Fixed another typo"). Commits are cheap in Git, so adding more is almost always the right answer. If I decide that I'm down a bad path I can undo the commit and get back to my last known good state (e.g. git undo; git wipe). The wipe command is especially nice because it creates a commit from all the current work and then orphans it. Eventually Git will garbage collect that commit and erase it permanently, but until then (by default, reflog entries for unreachable commits are kept for 30 days) I can find it in the reflog. Think of it as the recycle bin for commits.

cm = !git add -A && git commit -m

undo = reset HEAD~1 --mixed

wipe = !git add -A && git commit -qm 'WIPE SAVEPOINT' && git reset HEAD~1 --hard
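To make the recycle-bin idea concrete, here's a rough sketch of fishing a wiped savepoint back out of the reflog. It runs in a throwaway repo; the file name app.txt, the branch name rescued, and the demo identity are all made up for illustration, and it assumes a POSIX shell with Git on the PATH.

```shell
#!/bin/sh
# Scratch-repo demo: wipe a change, then pull it back out of the reflog.
set -e

repo=$(mktemp -d)                       # throwaway directory for the demo
cd "$repo"
git init -q
git config user.name "Demo User"        # placeholder identity, local to this repo
git config user.email "demo@example.com"

echo "good" > app.txt
git add -A && git commit -qm "Known good"

echo "experiment" > app.txt
# Same steps as the wipe alias: commit everything, then drop the commit.
git add -A && git commit -qm 'WIPE SAVEPOINT' && git reset -q --hard HEAD~1

# No branch points at the savepoint now, but the reflog still lists it.
savepoint=$(git log -g --format='%H %gs' | grep 'WIPE SAVEPOINT' | head -n 1 | cut -d' ' -f1)

# Give it a branch name so it is reachable again and safe from garbage collection.
git branch rescued "$savepoint"
git show rescued:app.txt                # prints the content that was wiped
```

Plain git reflog shows the same entries if you'd rather eyeball the list and copy the hash by hand.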

Let's say I get stuck. I need to talk to the Business Analyst but they're in a meeting for the next 35 minutes. Do I just take a long coffee break? Nope. I commit what I've got and switch branches to whatever else I've got up in the air. Or I pick up a new story and try to get halfway through it before switching back to higher priority work. With Git I'm able to switch context in a really clean way. And if I can't remember where I left off, I can check the history on the branch to jog my memory (e.g. git lga).

lga = log --graph --oneline --all --decorate --abbrev-commit
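Here's a small sketch of that context switch using plain Git commands instead of my aliases, so it runs anywhere. It assumes a POSIX shell with Git installed; the file names and the demo identity are invented, and the story IDs are just the examples from this post.

```shell
#!/bin/sh
# Scratch-repo demo: park one story, start another, then come back.
set -e

repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Demo User"        # placeholder identity, local to this repo
git config user.email "demo@example.com"

echo "base" > readme.txt
git add -A && git commit -qm "Initial commit"
trunk=$(git symbolic-ref --short HEAD)  # master or main, depending on Git defaults

# Start story ABC-1234 and get stuck partway through.
git checkout -q -b ABC-1234
echo "half-finished" > feature-a.txt
git add -A && git commit -qm "WIP: waiting on the BA"

# Park it and pick up another story from the trunk.
git checkout -q "$trunk"
git checkout -q -b ABC-1237
echo "other work" > feature-b.txt
git add -A && git commit -qm "Start ABC-1237"

# Later: switch back and review the history to jog my memory.
git checkout -q ABC-1234
git log --graph --oneline --all --decorate
```

After the last checkout the working directory holds only the ABC-1234 files; the other story's work stays on its own branch until I switch back to it.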

Now it's time to share some code. We like doing reviews of our work with the rest of the team. I want to be able to demonstrate work from several of my branches without having to force my colleagues to watch me type Git commands in and restart my IDE. Branching is cheap in Git so I'll create a branch just for my demo and merge in all of today's work (e.g. git cob demo-2020-02-06; git merge ABC-1234; git merge ABC-1237).
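A sketch of the demo-branch trick in a scratch repo, again with plain Git commands. It assumes a POSIX shell with Git installed and that the two stories don't touch the same lines, so the merges go through without conflicts. The file names and demo identity are hypothetical.

```shell
#!/bin/sh
# Scratch-repo demo: combine two story branches on a throwaway demo branch.
set -e

repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Demo User"        # placeholder identity, local to this repo
git config user.email "demo@example.com"

echo "base" > readme.txt
git add -A && git commit -qm "Initial commit"
trunk=$(git symbolic-ref --short HEAD)  # master or main, depending on Git defaults

# Two finished stories, each on its own branch off the trunk.
for story in ABC-1234 ABC-1237; do
    git checkout -q -b "$story" "$trunk"
    echo "work for $story" > "$story.txt"
    git add -A && git commit -qm "Finish $story"
done

# Throwaway branch that carries both stories for the review.
git checkout -q -b demo-2020-02-06 "$trunk"
git merge -q --no-edit ABC-1234         # fast-forwards the demo branch
git merge -q --no-edit ABC-1237         # creates a merge commit
ls
```

The trunk and the story branches are untouched by this, so I can delete the demo branch after the review without losing anything.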

The demo went well and it's finally time to commit my work back to TF version control (e.g. git rct). This gets the latest changes, rebases so that my commits sit on top of the latest TFS code, then iterates through my commits, turning each one into a TF check-in and using tf.exe to ship it up. If someone on my team checks in between when I start the process and when my check-ins are created, the tooling stops with an error message and I get to retry.

rct = !git tfup && git tfs rcheckin --debug
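If the retry gets tedious, a tiny wrapper could do it for me. This is only a sketch: retry is a helper I'm making up here, not part of Git or Git-TFS, and it assumes that simply re-running the command is safe. If the rebase inside tfup hits a real conflict, no amount of retrying will help and I'd have to resolve it by hand.

```shell
#!/bin/sh
# retry MAX CMD [ARGS...]: run CMD until it succeeds or MAX attempts are used up.
# Hypothetical helper for illustration only.
retry() {
    max=$1
    shift
    attempt=1
    while ! "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            echo "giving up after $attempt attempts" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        echo "command failed, retrying (attempt $attempt of $max)" >&2
    done
}
```

With that defined in my shell profile, retry 3 git rct would attempt the check-in up to three times before giving up.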

Want a summary of everything you did yesterday for your morning standup? I found this one on Twitter: git standup.

Here's the complete list of aliases from my .gitconfig:

[alias]

co = checkout

ec = config --global -e

up = !git pull --rebase --prune $@ && git submodule update --init --recursive

tfup = !git tfs pull --rebase --debug $@

ct = tfs ct

rct = !git tfup && git tfs rcheckin --debug

cob = checkout -b

cm = !git add -A && git commit -m

save = !git add -A && git commit -m 'SAVEPOINT'

wip = !git add -u && git commit -m "WIP"

undo = reset HEAD~1 --mixed

amend = commit -a --amend

wipe = !git add -A && git commit -qm 'WIPE SAVEPOINT' && git reset HEAD~1 --hard

bclean = "!f() { git branch --merged ${1-master} | grep -v \" ${1-master}$\" | xargs -r git branch -d; }; f"

bdone = "!f() { git checkout ${1-master} && git up && git bclean ${1-master}; }; f"

migrate = "!f(){ CURRENT=$(git symbolic-ref --short HEAD); git checkout -b $1 && git branch --force $CURRENT ${3-'$CURRENT@{u}'} && git rebase --onto ${2-master} $CURRENT; }; f"

userstats = shortlog -sne

lga = log --graph --oneline --all --decorate --abbrev-commit

standup = "!f() { USERNAME=$(git config user.name); if [ $(date +%u) -eq 1 ]; then git --no-pager lga --since=\"last friday\" --author=\"$USERNAME\"; else git --no-pager lga --since=\"1 day ago\" --author=\"$USERNAME\"; fi; }; f"
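The standup alias is the densest one in the list, so here's its date logic pulled out into a plain shell function. The function name since_for_weekday is mine, not part of the alias; the weekday number is what date +%u prints (1 is Monday).

```shell
#!/bin/sh
# since_for_weekday DOW: pick the --since window the standup alias would use.
# DOW is the ISO weekday number as printed by `date +%u` (1 = Monday).
# Hypothetical helper for illustration only.
since_for_weekday() {
    if [ "$1" -eq 1 ]; then
        echo "last friday"      # on Monday, reach back over the weekend
    else
        echo "1 day ago"        # any other day, just cover yesterday
    fi
}

since=$(since_for_weekday "$(date +%u)")
echo "standup window today: --since=\"$since\""
```

The alias then passes that window, plus my user.name as the author filter, to lga with the pager turned off.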